
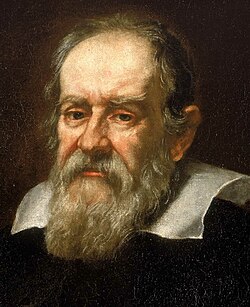

Galileo Galilei

Science is measuring.

Of course, it is about much more than measuring. The scientific approach includes deduction, induction, lateral thinking and all of the other creative and logical mechanisms by which we arrive at ideas. But what distinguishes the ideas of science from those of religion, philosophy or art is that they are expressed as testable hypotheses – and by testable hypotheses, scientists mean ideas that can be examined by observations or experiments that yield outcomes that can be measured.

Earth scientists use astonishingly diverse approaches to measure our world, from the submolecular to the planetary, from bacterial syntrophic interactions to the movement of continental plates. A particularly important aspect of observing the Earth system involves chemical reactions – the underlying processes that form rocks, fill the oceans and sustain life. The Goldschmidt Conference, held this year in Florence, is the annual highlight of innovations in geochemical methodologies and the new knowledge emerging from them.

Geochemists reported advances in measuring the movement of electrons across nanowires, laid down by bacteria in soil much as electricians lay down cables; the transitory release of toxic metals by microorganisms, daily emissions of methane from bogs, and annual emissions of carbon dioxide from the whole of the Earth; the history of life on Earth as recorded by the isotopes of rare metals archived in marine sediments; and the chemical signatures in meteorites and the wavelengths of light emitted from distant solar nebulae, both of which help us infer the building blocks from which our own planet was formed.

******

The Goldschmidt Conference is often held in cities with profound cultural legacies, such as Florence. And although Florence’s legacy is perhaps dominated by Michelangelo and Botticelli, Tuscany was also home to Galileo Galilei, and he and the Scientific Revolution are similarly linked to the Renaissance and Florence. Wandering through the Galileo Museum is a stunning reminder of how challenging it is to measure the world around us, of how casually we take many of these measurements for granted, and of the ingenuity of those who first cracked the challenges of quantifying time or temperature or pressure.

And it is also exhilarating to imagine the thrill of those scientists as they developed new tools and turned them to the stars above us or the Earth beneath us. Galileo’s own words tell us how he felt when he pointed his telescope at Jupiter and discovered the satellites orbiting around it; and how those observations unlocked other insights and emboldened new hypotheses:

‘But what exceeds all wonders, I have discovered four new planets and observed their proper and particular motions, different among themselves and from the motions of all the other stars; and these new planets move about [Jupiter] like Venus and Mercury… move about the sun.’

The discoveries of the 21st century are no less exciting, if perhaps somewhat more nuanced.

******

The University of Bristol is one of the world leaders in the field of geochemistry. Laura Robinson co-chaired several sessions, while also presenting a new approach to estimating water discharge from rivers, based on the ratio of uranium isotopes in coral; the technique has great potential for studying flood and drought events over the past 100,000 years, helping us to better understand, for example, the behaviour of monsoon systems on which the lives of nearly one billion people depend. Heather Buss chaired a session and presented research quantifying the nature and consequences of reactions occurring at the bedrock-soil interface – and by extension, the processes by which rock becomes soil and nutrients are liberated, utilised by plants or flushed to the oceans. Kate Hendry, arriving at the University of Bristol in October, presented her latest work employing the distribution of zinc in sponges (trapped in their opal hard parts) to examine how organic matter is formed in surface oceans, then transported to the deep ocean and ultimately buried in sediments; this is a key aspect of understanding how carbon dioxide is ultimately removed from the atmosphere. The Conference is not entirely about measuring these processes – it is also about how those measurements are interpreted. This is exemplified by Andy Ridgwell, who presented two keynote lectures on his integrated physical, chemical and biological model, with which he evaluated the evidence for how and when oceans become more acidic or devoid of oxygen.

What next? Every few years, a major innovation opens up new insights. Until about 20 years ago, organic carbon isotope measurements (carbon occurs as two stable isotopes – ~99% as the isotope with 12 nuclear particles and ~1% as the isotope with 13) were conducted almost exclusively on whole rock samples. These values were useful in studying ancient life and the global carbon cycle, but somewhat limited because the organic matter in a rock derives from numerous organisms including plants, algae and bacteria. But in the late 1980s, new methods allowed us to measure carbon isotope values on individual compounds within those rocks, including compounds derived from specific biological sources. Now, John Eiler and his team at Caltech are developing methods for measuring the values in specific parts of those individual compounds, or even at a single position within them. This isotope mapping of molecules could open up new avenues for determining the temperatures at which ancient animals grew or for elucidating what microorganisms are doing deep in the Earth’s subsurface.

Scientists are going to continue to measure the world around us. And while that might sound cold and calculating, it is not! We do this out of our fascination and wonder for nature and our planet. Just as Galileo’s discovery of the Jovian satellites excited our imagination of the cosmos, these new tools are helping us unravel the astonishingly beautiful interactions between our world and the life upon it.

This blog was written by Professor Rich Pancost, Cabot Institute Director, University of Bristol.
