Pledges to cut greenhouse gas emissions are very welcome – but accurate monitoring across the globe is crucial if we are to meet targets and combat the devastating consequences of global warming.

During COP26 in Glasgow, many countries have set out their targets to reach net-zero by the middle of this century.

But a serious note of caution was raised in a report in the Washington Post. It revealed that many countries may be under-reporting their emissions, with a gap between actual emissions into the atmosphere and what is being reported to the UN.

This is clearly a problem: if we are uncertain about what we are emitting now, we will not know for certain that we have achieved our emission reduction targets in the future.

Quantifying emissions

Currently, countries must follow international guidelines when it comes to reporting emissions. These reports are based on “bottom-up” methods, in which national emissions are tallied up by combining measures of socioeconomic activity with estimates of the emissions intensity of those activities. For example, if you know how many cows you have in your country and how much methane a typical cow produces, you can estimate the total methane emitted from all the cows.
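The cow example boils down to simple arithmetic: multiply each activity by its emission factor, then add up the results. The sketch below shows that arithmetic in Python. The `Source` class and `bottom_up_total` function are hypothetical names, and every number in it is an illustrative value, not an official emission factor or real national statistic.

```python
"""Minimal sketch of a bottom-up ("activity x emission factor") inventory.

All activity counts and emission factors below are hypothetical,
illustrative values, not official inventory data.
"""

from dataclasses import dataclass


@dataclass
class Source:
    name: str
    activity: float          # e.g. number of animals, or amount of fuel burned
    emission_factor: float   # kg of gas emitted per unit of activity per year


def bottom_up_total(sources: list[Source]) -> float:
    """Return total annual emissions in kg: the sum of activity * emission factor."""
    return sum(s.activity * s.emission_factor for s in sources)


# Hypothetical methane sources for an imaginary country.
methane_sources = [
    Source("dairy cows", activity=1_000_000, emission_factor=100.0),
    Source("beef cattle", activity=2_000_000, emission_factor=50.0),
]

total_kg = bottom_up_total(methane_sources)
# 1 kilotonne (kt) = 1e6 kg
print(f"Estimated methane emissions: {total_kg / 1e6:.0f} kt per year")
```

The weakness the article goes on to describe is built in: if the activity counts or the emission factors are wrong, the total is wrong too, and nothing in the calculation itself will flag it.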

There are internationally agreed guidelines that specify how this kind of accountancy should be done, and there is a system of cross-checking to ensure that the process is being followed appropriately.

But, according to the Washington Post article, there appear to be some unexpected differences in emissions being reported between similar countries.

The reporting expectations for different countries also vary considerably. Developed countries must submit detailed, comprehensive reports every year. But, in recognition of the administrative burden involved, developing countries can currently report much less frequently.

There are also some notable gaps in what needs to be reported. For example, the potent greenhouse gases that were responsible for depleting the stratospheric ozone layer, such as chlorofluorocarbons (CFCs), are not included, because they are controlled separately under the Montreal Protocol.

A ‘top-down’ view from the atmosphere

To address these issues, scientists have been developing increasingly sophisticated techniques that use atmospheric greenhouse gas observations to keep track of emissions. This “top-down” view measures what is in the atmosphere, and then uses computer models to work backwards to figure out what must have been emitted upwind of the measurements.
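“Working backwards” is usually framed as an inverse problem. The sketch below uses one textbook form, a linear Bayesian inversion. It assumes that emissions from a few regions map linearly onto measured concentration enhancements through a sensitivity matrix `H`, which in practice would come from an atmospheric transport model. It then starts from a prior estimate, such as a reported inventory, and adjusts that estimate toward what the measurements imply. This is an illustrative sketch, not the Met Office system or the UK's actual method. The function name `bayesian_inversion`, the matrix `H`, the measurements and the "true" emissions are all synthetic and hypothetical.

```python
"""Minimal sketch of a linear Bayesian ("top-down") emissions inversion.

Assumptions (illustrative, not any operational system): emissions x from a
few regions relate linearly to observed concentration enhancements y via
y = H @ x + noise. H would normally come from a transport model; here H
and the observations are synthetic.
"""

import numpy as np


def bayesian_inversion(y, H, x_prior, B, R):
    """Return the posterior mean and covariance of x given y = H @ x + noise.

    x_prior, B: prior emissions (e.g. a bottom-up inventory) and their covariance.
    R: observation-error covariance (measurement plus model error).
    """
    innovation = y - H @ x_prior           # observed minus prior-predicted concentrations
    S = H @ B @ H.T + R                    # covariance of that mismatch
    # Gain K = B H^T S^-1, computed with a linear solve rather than an explicit
    # inverse (valid because B and S are symmetric).
    K = np.linalg.solve(S, H @ B).T
    x_post = x_prior + K @ innovation      # prior adjusted toward the data
    P_post = B - K @ H @ B                 # reduced uncertainty after using the data
    return x_post, P_post


rng = np.random.default_rng(0)
n_obs, n_regions = 50, 3

H = rng.uniform(0.0, 1.0, size=(n_obs, n_regions))    # synthetic sensitivities
x_true = np.array([10.0, 20.0, 5.0])                  # "actual" emissions (unknown in reality)
y = H @ x_true + rng.normal(0.0, 0.1, size=n_obs)     # synthetic measurements

x_prior = np.array([15.0, 15.0, 15.0])                # a deliberately wrong "reported" inventory
B = np.diag([10.0**2] * n_regions)                    # prior uncertainty: 10 units (1 sigma)
R = np.diag([0.1**2] * n_obs)                         # measurement noise: 0.1 units (1 sigma)

x_post, P_post = bayesian_inversion(y, H, x_prior, B, R)
print("prior:            ", x_prior)
print("posterior:        ", np.round(x_post, 2))
print("posterior 1-sigma:", np.round(np.sqrt(np.diag(P_post)), 2))
```

Comparing the prior with the posterior and its uncertainty is what allows a top-down estimate to test an inventory. In this synthetic case the posterior moves away from the mis-specified prior and toward `x_true`. Real systems also have to deal with background concentrations, transport-model error and much larger numbers of unknowns.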

To demonstrate the technique, an international team of scientists converged on Glasgow to observe how carbon dioxide and methane levels have changed during the COP26 conference.

While this approach cannot provide the level of detail on emission sectors (such as cows, leaks from pipes, fossil fuels or cars) that the “bottom-up” methods attempt, scientists have demonstrated that it can show whether the overall inventory for a particular gas is accurate or not.

The UK was the first country to routinely publish top-down emission estimates in its annual National Inventory Report to the United Nations. It is now one of three countries to do so, along with Switzerland and Australia.

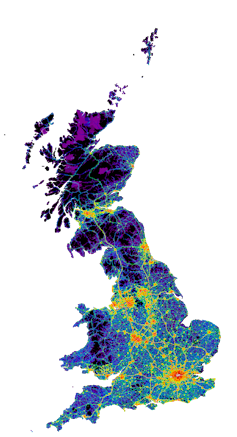

A network of five measurement sites around the UK and Ireland continuously monitors the levels of all the main greenhouse gases in the air using tall towers in rural regions.

Emissions are estimated from the measurements using computer models developed by the Met Office. And the results of this work have been extremely enlightening.

In a recent study, we showed that the reported downward trend in the UK’s methane emissions over the last decade is mirrored in the atmospheric data. But a large reported drop before 2010 is not, suggesting the methane emissions were over-estimated earlier in the record.

In another, we found that the UK had been over-estimating emissions of a potent greenhouse gas used in car air conditioners for many years. These studies are discussed with the UK inventory team and used to improve future inventories.

While there is currently no requirement for countries to use top-down methods as part of their reporting, the most recent guidelines and a new World Meteorological Organisation initiative advocate their use as best practice.

If we are to move from only three countries evaluating their emissions in this way to a global system, there are a number of challenges that we would need to overcome.

Satellites may provide part of the solution. For carbon dioxide and methane, the two most important greenhouse gases, observations from space have been available for more than a decade. The technology has improved dramatically in this time, to the extent that imaging of some individual methane plumes is now possible from orbit.

In 2018, India, which does not have a national monitoring network, used these techniques to include a snapshot of its methane emissions in its report to the UN.

But satellites are unlikely to provide enough information alone.

To move towards a global emissions monitoring system, space-based and surface-based measurements will be required together. The cost to establish ground-based systems such as the UK’s will be somewhere between one million and tens of millions of dollars per country per year.

But that level of funding seems achievable when we consider that billions have been pledged for climate protection initiatives. So, if the outcome is more accurate emissions reporting, and a better understanding of how well we are meeting our emissions targets, such expenditure seems like excellent value for money.

It will be up to the UN and global leaders to ensure that the international systems of measurement and top-down emissions evaluation can be scaled up to meet the demands of a monitoring system that is fit for purpose. Without robust emissions data from multiple sources, the accuracy of future claims of emission reductions may be called into question.

————————-

This blog is written by Cabot Institute for the Environment member Professor Matt Rigby, Reader in Atmospheric Chemistry, University of Bristol

This article is republished from The Conversation under a Creative Commons license. Read the original article.