Image source: Wikimedia Commons

Caboteer Dr Colin Nolden blogs on a recent All-Party Parliamentary Rail & Climate Change Groups meeting on ‘Decarbonising the UK rail network’. The event was co-chaired by Martin Vickers MP and Daniel Zeichner MP. Speakers included:

- Professor Jim Skea, CBE, Imperial College London

- David Clarke, Technical Director, RIA

- Anthony Perret, Head of Sustainable Development, RSSB

- Helen McAllister, Head of Strategic Planning (Freight and National Passenger Operators), Network Rail

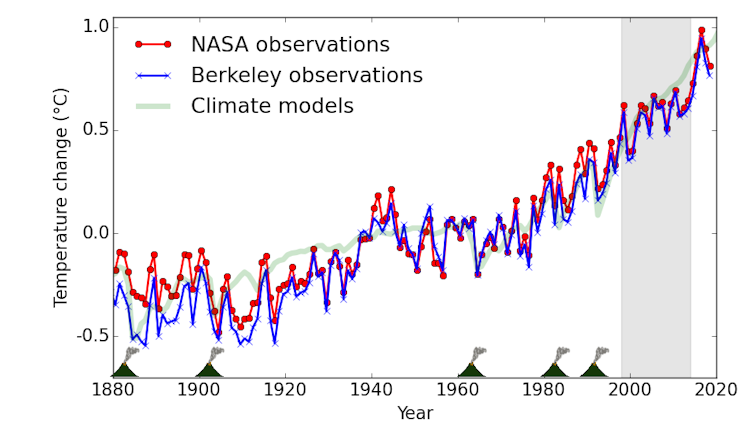

The meeting kicked off with a broad overview of the global decarbonisation challenge by Jim Skea. As a former member of the UK’s Climate Change Committee, Co-chair of Working Group III of the Intergovernmental Panel on Climate Change, which oversaw the 1.5°C report published in October 2018, and a member of the Scottish Just Transition Commission, he emphasised that the net-zero target ‘is humongously challenging’. We need to recognise that all aspects of our land, economy and society require change, including lifestyles and behaviours. At the same time, the loophole in the UK net-zero target that allows permits to be bought in to ‘offset’ emissions increases uncertainty, as it is unclear what needs to be done territorially. The starting point for decarbonising mobility and many other sectors is nevertheless the decarbonisation of our electricity supply by 2030, as this allows the electrification of energy demand.

The recent International Energy Agency report on the ‘Future of Rail’ was mentioned. It suggests that the rail sector is one of the blind spots for decarbonisation, even though rail carries 8% of passenger transport and 7% of freight transport while accounting for only 2% of transport energy demand. The report concludes that a modal shift and sustainable electrification are necessary to decarbonise transport.

David Clarke pointed towards the difficulties encountered in the electrification of the Great Western line to Bristol and beyond to Cardiff but stressed that this was not a good measure for future electrification endeavours. Electrification was approached too ambitiously in 2009 following the 20-year electrification hiatus. Novel technology and fixed deadlines drove up costs on the Great Western line. Current electrification phases such as the Bristol-Cardiff stretch, on the other hand, are being developed within the cost envelope. The problem now is the lack of further planned electrification, which risks demobilising the relevant teams. Such a hiatus could once again lead to teething problems when electrification is prioritised again. Bimodal trains that have accompanied electrification on the Great Western line will continue to play an important role in ongoing electrification as they allow at least part of each journey to be completed free of fossil fuels.

Anthony Perret mentioned the RSSB’s role in the ongoing development of a rail system decarbonisation strategy. The ‘what’ report was published in January 2019 and the ‘how’ report is still being drafted. Given that 70% of journeys and 80% of passenger kilometres are already electrified, he suggested that new technology combinations such as hydrogen and battery will need to be tested to fill the gap where electrification is not economically viable. Hydrogen is likely to be a solution for longer distances and higher speeds, while batteries are more likely to be suitable for discontinuous electrification such as the ‘bridging’ of bridges and tunnels. Freight transport’s 25,000 V requirement currently implies either diesel or electrification to provide the necessary power. Anthony finished with a word of caution regarding rail governance complexities. Rail system governance needs an overhaul if it is not to hinder decarbonisation.

Helen McAllister is engaged in a task force to establish what funding needs to be made available for deliverable, affordable and efficient solutions. Particular interest lies in the ‘middle’, where full electrification is not economically viable but where the promising combinations of technologies that Anthony mentioned might provide appropriate solutions. This is where emphasis on innovation will be placed and economic cases are sought. This is particularly relevant to the Riding Sunbeams project I am involved with, as discontinuous and innovative electrification is one of the avenues we are pursuing. However, Helen highlighted the failure of current analytical tools to take carbon emissions into account. The ‘Green Book’ requires revision to place more emphasis on environmental outcomes and to specify the ‘bang for your buck’ in terms of carbon to make it a driving factor in decision-making. At the same time, she suggested that busy commuter lines that are the obvious choice for electrification are also likely to score highest on decarbonisation.

David pointed out that despite the ambitious targets in place, new diesel rolling stock that was ordered before decarbonisation took priority will only be put into service in 2020 and will in all likelihood continue running until 2050. This is an indication of the lock-in associated with durable rail assets that Jim Skea also strongly emphasised as a challenge to overcome. Transport for Wales, on the other hand, are already looking into progressive decarbonisation options, which include Riding Sunbeams, along with four other progressive decarbonisation projects currently being implemented. Helen agreed that diesel will continue to have a role to play but that franchise specification for rolling stock regarding passenger rail and commercial specification regarding freight rail can help move the retirement date forward.

Comments and questions from the audience suggested that the decarbonisation challenge is galvanising the industry, with both rolling stock companies and manufacturers putting their weight behind progressive solutions. Ultimately, more capacity for rail is required to enable modal shift towards sustainable rail transport. In this context, Helen stressed the need to apply the same net-zero criteria across all industries to ensure that all sectors engage in the same challenge, ranging from aviation to railways. Leo Murray from Riding Sunbeams asked whether unelectrified railway lines into remote areas such as the Scottish Highlands, Mid-Wales and Cornwall could be electrified with overhead electricity transmission lines to transmit the power from such remote areas to urban centres, with rail electrification as a by-product. Chair Daniel Zeichner pointed towards a project that seeks to connect Calais and Folkestone with a thick DC cable through the Channel Tunnel, and this is something we will follow up with some of the speakers.

In conclusion, Anthony pointed towards the Rail Carbon Tool as a step in the right direction: from January 2020 onwards it will help measure the capital carbon involved in all projects above a certain size. David pointed towards increasing collaboration with the Advanced Propulsion Centre at Cranfield University to cross-fertilise innovative solutions across different mobility sectors.

Overall, it was an intense yet enjoyable hour in a sticky room packed full of sustainable rail enthusiasts. Although this might evoke images of grey hair, ill-fitting suits and the odd trainspotting binoculars, it was refreshing to see so many ideas and so much enthusiasm brought to the fore by a topic as mundane as ‘decarbonising the UK rail network’.

——————————————-

This blog is written by Dr Colin Nolden, Vice-Chancellor’s Fellow, University of Bristol Law School and Cabot Institute for the Environment.

Colin is currently leading a new Cabot Institute Masters by Research project on a new energy system architecture. This project will involve close engagement with community energy organisations to assess technological and business model feasibility. Sounds up your street? Find out more about this Masters on our website.