JAN THIJS/AMAZON STUDIOS

The Wheel of Time, the 14-book epic fantasy now turned into an Amazon Prime TV series, is a medieval-style adventure set in the Third Age of the World of the Wheel. Although it is not explicit in the storyline, notes from the late author suggest that the First Age was actually modern-day Earth, which ended with a dramatic event (perhaps even climate change). From these notes, we estimate the show takes place around 18,000 years from today.

For climate scientists like us, this poses an interesting question: would today’s climate change still be experienced in the World of the Wheel, even after all those centuries?

About a quarter of carbon dioxide emitted today will remain in the atmosphere even 18,000 years from now. According to biogeochemistry models, carbon dioxide levels could be as high as 1,100 parts per million (ppm) at that point. That’s compared with a present-day value of 415ppm. This very high value assumes that the Paris climate goals will be exceeded and that many natural stores of carbon will also be released into the atmosphere (melting permafrost, for instance).
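To get a feel for what these concentrations mean, here is a minimal sketch that converts a change in carbon dioxide into an approximate radiative forcing. It assumes the widely used simplified logarithmic expression, ΔF ≈ 5.35 × ln(C/C₀) W/m², which is a textbook approximation and not the biogeochemistry models mentioned above. The function name and the choice of 415ppm as the reference level are ours, for illustration only. The approximation also becomes less reliable at very high concentrations, and a forcing is not a temperature.

```python
import math

# Coefficient of the simplified logarithmic CO2 forcing expression (W/m^2).
# Assumption: a standard textbook approximation, not the models used in the article.
FORCING_COEFFICIENT_W_M2 = 5.35


def co2_forcing_w_m2(concentration_ppm: float, reference_ppm: float) -> float:
    """Approximate radiative forcing (W/m^2) of CO2 relative to a reference level."""
    if concentration_ppm <= 0 or reference_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return FORCING_COEFFICIENT_W_M2 * math.log(concentration_ppm / reference_ppm)


if __name__ == "__main__":
    present_day_ppm = 415.0   # present-day value quoted in the article
    far_future_ppm = 1100.0   # upper estimate quoted for 18,000 years from now
    forcing = co2_forcing_w_m2(far_future_ppm, present_day_ppm)
    print(f"Extra forcing relative to today: {forcing:.1f} W/m^2")
```

Under these assumptions the extra forcing relative to today comes out at roughly 5 W/m². The logarithm is the key design point: each doubling of carbon dioxide adds about the same forcing, so the jump from 415ppm to 1,100ppm counts for less than the raw numbers might suggest.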

But high carbon dioxide concentrations do not necessarily mean a warmer climate. That’s because, over such a long period, slow changes in the orbit and tilt of the planet become more important. These changes are known as Milankovitch cycles, and each cycle lasts for around 100,000 years. Given that we are currently at the peak of such a cycle, the planet will naturally cool over the next 50,000 years, which is why scientists were once worried about a new ice age.

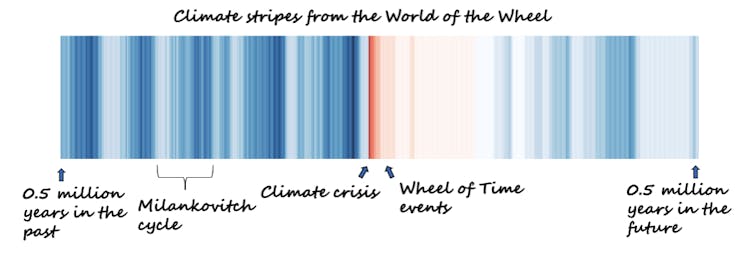

But will this be enough to offset the warming from the remaining carbon dioxide in the atmosphere? The image below shows a version of the classic warming stripes, a ubiquitous symbol of the past 150 years of climate change, but instead applied over 1 million years:

Authors modified from Dan Lunt et al, Author provided

You can clearly see the 100,000-year Milankovitch cycles. Anything red can be considered anthropogenic climate change, and the events of the Wheel of Time are well within this period. Even the descending Milankovitch cycle won’t be enough to counteract the increased warming from carbon dioxide, and so the inhabitants of the World of the Wheel would still experience elevated temperatures from a climate crisis that occurred 18,000 years earlier.

Simulating the weather of the World

However, some of the weather changes from the still-elevated temperatures could be offset by other factors. Those 18,000 years aren’t very long from a geological perspective, so in normal circumstances the landmasses would not change significantly. But in this fantasy future, magical channelers “broke” the world at the end of the Second Age, creating several new supercontinents.

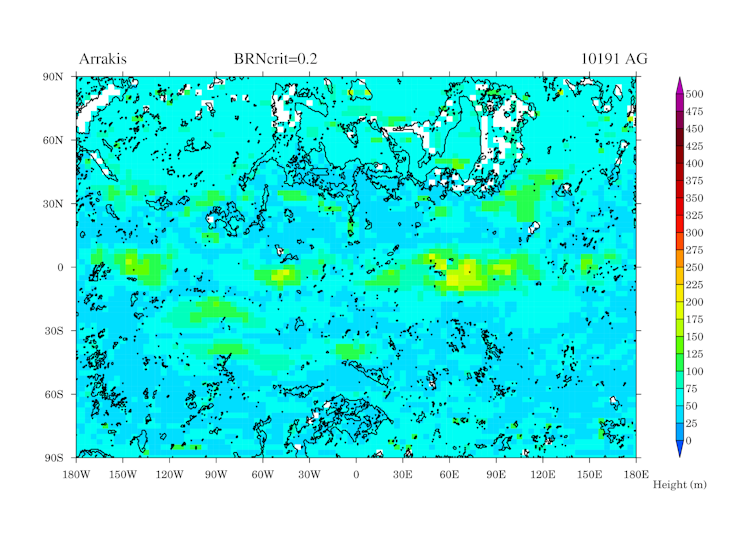

To find out how the climate would work in the World of the Wheel, we used an exoplanet model. This complex computer program uses fundamental principles of physics to simulate the weather patterns on the hypothetical future planet, once we had fed in its topography based on hand-drawn maps of the world, and carbon dioxide levels of 830ppm based on one of the high potential future carbon pathways.

According to our model, the World of the Wheel would be warm all over the surface, with temperatures over land never cold enough for snow except on the mountains. No chance of a white Christmas in this future. Here the story and the science diverge, as snow is mentioned at times in the Wheel of Time. The long-term effects of climate change may have surpassed the imagination of its author, the late great Robert Jordan.

climatearchive.org, Author provided

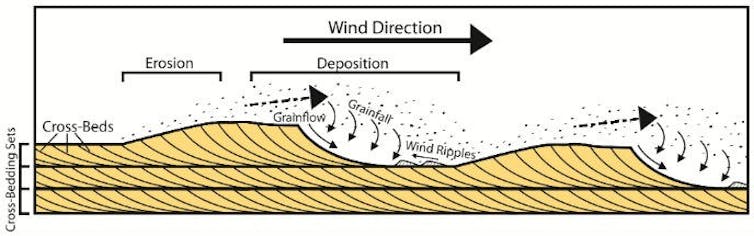

The World of the Wheel would have stronger and wavier high-altitude jet streams than modern-day Earth. This is likely because there are more mountain ranges in the World of the Wheel, which generate atmospheric waves called Rossby waves, causing oscillations in the jet. There is some limited evidence that the jet stream gets wavier with climate change as well, although this is likely to be less important than the mountain ranges. The jet would bring moisture from the western ocean onto land, and deposit it north of the Mountains of Dhoom. It is surprising, then, that this region (the Great Blight) is so desert-like in the books – perhaps there is some magic at play to explain this.

Winds would often revolve around two particularly enormous mountains, Dragonmount and Shayol Ghul, before blowing downslope and reaching far across the land masses. The peak of Dragonmount itself is nearly always surrounded by clouds. This is because the mountain is so large that winds travelling up it lift moist surface air to higher altitudes, where it cools until its water vapour condenses into clouds.
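The cloud formation described above can be illustrated with a rule of thumb from meteorology. As a minimal sketch, assume unsaturated air cools at the dry adiabatic lapse rate of about 9.8°C per kilometre as it is forced upslope, while its dew point falls by about 1.8°C per kilometre. Cloud forms roughly where the two meet, which works out at about 125 metres of ascent per degree of difference. The function name and the surface values below are hypothetical and are not taken from our model output.

```python
# Rule-of-thumb lapse rates (assumed textbook values, not model output).
DRY_ADIABATIC_LAPSE_C_PER_KM = 9.8  # cooling rate of rising unsaturated air
DEW_POINT_LAPSE_C_PER_KM = 1.8      # fall in dew point of the rising air


def cloud_base_height_m(surface_temp_c: float, surface_dew_point_c: float) -> float:
    """Estimate the ascent (in metres) needed before lifted surface air forms cloud."""
    spread_c = surface_temp_c - surface_dew_point_c
    if spread_c < 0:
        raise ValueError("dew point cannot exceed air temperature")
    closing_rate_c_per_km = DRY_ADIABATIC_LAPSE_C_PER_KM - DEW_POINT_LAPSE_C_PER_KM
    return 1000.0 * spread_c / closing_rate_c_per_km


if __name__ == "__main__":
    # Hypothetical warm, humid air at the foot of a large mountain.
    height_m = cloud_base_height_m(surface_temp_c=25.0, surface_dew_point_c=15.0)
    print(f"Estimated cloud base: about {height_m:.0f} m above the surface")
```

With these illustrative inputs, cloud would form a little over a kilometre above the surface. On a mountain as tall as Dragonmount, air forced up the slopes would pass that level long before reaching the peak, which fits a summit that is nearly always in cloud.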

The fact that winds would be so different from modern-day Earth is predominantly caused by topography, not the underlying increased temperatures from climate change. Nevertheless, in the World of the Wheel, it is clear that despite the extremely long time since carbon polluted the atmosphere, the inhabitants are still exposed to warmer-than-usual temperatures.

Acknowledging just how long the effects of climate change will persist should be a catalyst for change. Yet, even after accepting the facts, we face psychological barriers to subsequent personal action, not least because comprehending the timescales of climate change requires a considerable degree of abstraction. But, given the known changes in extreme weather from climate change, and given how long these changes will remain, we must ask ourselves: how would the mysterious and powerful Aes Sedai stop the climate crisis?

—————————

This blog is by Caboteers Professor Dann Mitchell, Professor of Climate Science, University of Bristol; Emily Ball, PhD Candidate, Climate Science, University of Bristol; Sebastian Steinig, Research Associate in Paleoclimate Modelling, University of Bristol; and Rebecca Áilish Atkinson, Research Fellow, Cognitive Psychology, University of Sussex.

This article is republished from The Conversation under a Creative Commons license. Read the original article.