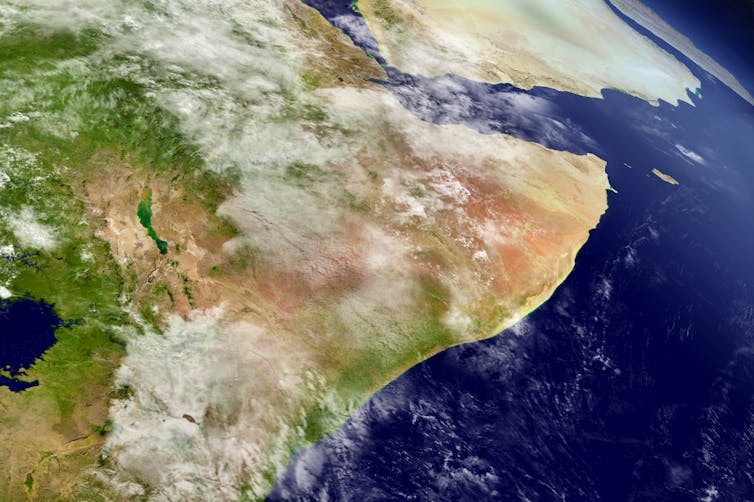

East Africa has recently had an unprecedented series of failed rains. But some rainy seasons are bringing the opposite: huge amounts of rainfall.

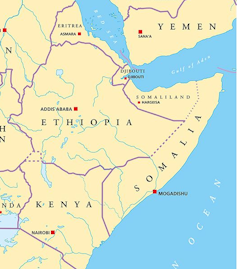

In the last few months of 2023, the rainy season known as the “short rains” was much wetter than normal. It brought severe flooding to Kenya, Somalia and Tanzania. In Somalia, more than 2 million people were affected, with over 100 killed and 750,000 displaced from their homes. Tens of thousands of people in northern Kenya lost livestock, farmland and homes.

The very wet short rainy seasons are linked to a climate event known as a positive Indian Ocean Dipole (IOD). Climate model projections show an increasing trend in extreme Indian Ocean Dipole events.

In a new research paper, we set out to investigate what effect more frequent extreme Indian Ocean Dipole events would have on rainfall in east Africa. We did this using a large number of climate simulations and models.

Our results show that more extreme dipole events increase the likelihood of very wet days, and therefore of very wet seasons.

This could lead to extreme weather events, even more extreme than the floods of 1997, which led to 10 million people requiring emergency assistance, or those of 2019, when hundreds of thousands were displaced.

We recommend that decision-makers plan for this kind of extreme rainfall, and the resulting devastating floods.

How the Indian Ocean Dipole works

Indian Ocean Dipole events tend to occur in the second half of the year, and can last for months. They have two phases: positive and negative.

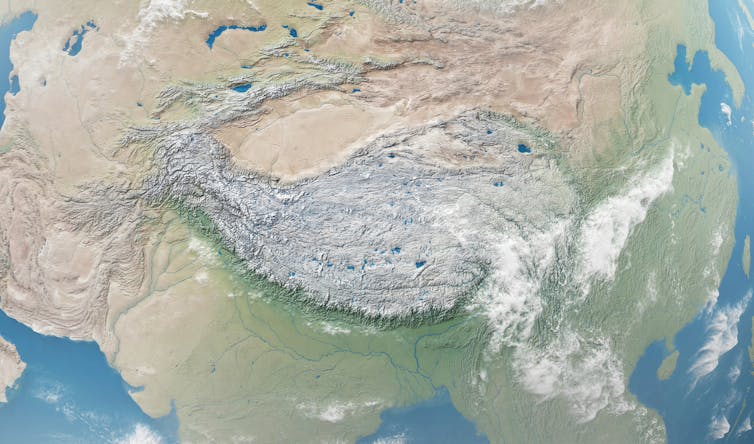

Positive events occur when the temperature of the sea surface in the western Indian Ocean is warmer than normal and the temperature in the eastern Indian Ocean is cooler than normal. Put simply, this temperature difference happens when winds move warmer water away from the ocean surface in the eastern region, allowing cooler water to rise.

In the warmer western Indian Ocean, more heated air will rise, along with water vapour. This forms clouds, bringing rain. Meanwhile, the eastern part of the Indian Ocean will be cooler and drier. This is why flooding in east Africa can happen at the same time as bushfires in Australia.

The opposite is true for negative dipole events: drier in the western Indian Ocean and wetter in the east.
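As a rough illustration of the two phases, the dipole can be summarised as a single number: the western sea surface temperature anomaly minus the eastern one. The Python sketch below is only an illustration and not the method used in the research paper. The function names, the sample temperatures and the 0.4 °C threshold are all assumptions chosen for the example.

```python
def dipole_index(west_sst, west_normal, east_sst, east_normal):
    """Return the west-minus-east sea surface temperature anomaly (deg C).

    Each anomaly is the observed temperature minus the long-term normal
    for that region. All inputs here are illustrative values.
    """
    west_anomaly = west_sst - west_normal
    east_anomaly = east_sst - east_normal
    return west_anomaly - east_anomaly


def dipole_phase(index, threshold=0.4):
    """Classify the phase; the 0.4 deg C threshold is an assumed cut-off."""
    if index > threshold:
        return "positive"   # warmer west, cooler east: wetter east Africa
    if index < -threshold:
        return "negative"   # cooler west, warmer east: drier east Africa
    return "neutral"


# Hypothetical season: west 1 deg C above normal, east 1 deg C below normal.
index = dipole_index(west_sst=29.0, west_normal=28.0,
                     east_sst=27.5, east_normal=28.5)
print(index, dipole_phase(index))
```

A larger positive value means a bigger temperature difference between west and east, which is what "more extreme" means for a positive dipole event.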

Under climate change we’re expecting to see more frequent and more extreme positive dipole events – bigger differences between east and west. This is shown by climate model projections. They are believed to be driven by different paces of warming across the tropical Indian Ocean – with western and northern regions projected to warm faster than eastern parts.

Heavy rainy seasons in east Africa are often attributed to El Niño, but recent research has shown that the direct impact of El Niño on east African rainfall is actually relatively modest. El Niño’s principal influence lies in its capacity to bring about positive dipole events. This happens because El Niño events tend to cool the water in the western Pacific Ocean, around Indonesia, which also helps to cool the water in the eastern Indian Ocean. These cooler temperatures then help kick-start a positive Indian Ocean Dipole.

Examining unprecedented events

Extreme positive Indian Ocean Dipole events are rare in the recent climate record. So to examine their potential impacts on rainfall extremes, we used a large set of climate simulations. The data allowed us to diagnose the sensitivity of rainfall to larger Indian Ocean Dipole events in a statistically robust way.

Our results show that as positive dipole events become more extreme, more wet days during the short rains season can be expected. This effect was found to be largest for the frequency of extremely wet days. Additionally, we found that as the dipole strength increases, the influence on the most extreme days becomes even larger. This means that dipole events which are even slightly “record-breaking” could lead to unprecedented levels of seasonal rainfall.

Ultimately, if positive Indian Ocean Dipole seasons increase in frequency, as predicted, regular seasons of flooding will become a new normal.

One aspect not included in our analysis is the influence of a warmer atmosphere on rainfall extremes. A warmer atmosphere holds more moisture, allowing for the development of more intense rain storms. This effect could combine with the influence of extreme positive dipoles to bring unprecedented levels of rainfall to the Horn of Africa.

2023 was a year of record-breaking temperatures driven both by El Niño and global warming. We might expect that this warmer air could have intensified rain storms during the season. Indeed, evidence from a recent assessment suggests that warming driven by climate change was very likely responsible for increased rainfall totals.

Responding to an unprecedented future

Policymakers need to plan for more frequent extreme rainfall and the flooding it brings.

In the long term, it is crucial to ensure that any new infrastructure is robust enough to withstand more frequent and heavier rains, and that government, development and humanitarian actors have the capacity to respond to the challenges.

Better use of technology, such as innovations in disseminating satellite rainfall monitoring via mobile phones, can communicate immediate risk. New frontiers in AI-based weather prediction, including initiatives focused specifically on eastern Africa, could improve the ability to anticipate localised rain storms.

Linking rainfall information with hydrological models designed for dryland environments is also essential. These will help to translate weather forecasts into impact forecasts, such as identifying risks of flash flooding down normally dry channels or bank overflow of key rivers in drylands.

These technological improvements are crucial. But better use of the forecast information we already have can also make a big difference. For instance, initiatives like “forecast-based financing”, pioneered by the Red Cross Red Crescent movement, link forecast triggers to pre-approved financing and predefined action plans, helping communities protect themselves before hazards have even started.
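The idea of linking forecast triggers to pre-approved financing and predefined actions can be sketched in a few lines of Python. This is a hypothetical illustration only, not a description of any real Red Cross Red Crescent system: the `Trigger` class, the probability thresholds, the fund amounts and the listed actions are all invented for the example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Trigger:
    """Hypothetical pre-agreed rule linking a forecast to funds and actions."""
    name: str
    min_probability: float       # forecast chance of extreme rain that activates it
    funds_released: int          # pre-approved amount, arbitrary units
    actions: Tuple[str, ...]     # predefined action plan


def activated_triggers(triggers: List[Trigger],
                       forecast_probability: float) -> List[Trigger]:
    """Return every trigger whose threshold the forecast meets or exceeds."""
    return [t for t in triggers if forecast_probability >= t.min_probability]


# Illustrative plan; every value below is an assumption.
EXAMPLE_PLAN = [
    Trigger("early warning", 0.4, 10_000, ("send SMS alerts",)),
    Trigger("early action", 0.7, 50_000,
            ("move livestock to higher ground", "pre-position relief supplies")),
]

for trigger in activated_triggers(EXAMPLE_PLAN, forecast_probability=0.75):
    print(trigger.name, trigger.funds_released, trigger.actions)
```

The key design point is that the thresholds, funds and actions are agreed before the season starts, so a forecast alone is enough to release support without further negotiation.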

For these endeavours to succeed, there must be dialogue between the science and practitioner communities. The scientific community can work with practitioners to integrate key insights into decisions, while practitioners can help to ensure research efforts target critical needs. With this, we can effectively build resilience to natural hazards and resist the increasing risks of our changing climate.

———————————

This blog is written by David MacLeod, Lecturer in Climate Risk, Cardiff University; Erik W. Kolstad, Research professor, Uni Research; Cabot Institute for the Environment member Katerina Michaelides, Professor of Dryland Hydrology, School of Geographical Sciences, University of Bristol, and Michael Singer, Professor of Hydrology and Geomorphology, Cardiff University. This article is republished from The Conversation under a Creative Commons license. Read the original article.
